Despite many distractions and just plain lethargy, work still progresses at a slow pace on the FIRST Robotics Competition C++ learning framework that I introduced

previously. I still think that it can provide an accessible and engaging experience for students learning robotics and programming in a way that will teach them how to contribute to the code on a real FRC robot. The RedBot still

exists (though it's now sold with a black chassis), and the code that I'm writing to emulate WPILib for it is still

here on GitHub. Now that it covers more of the WPILib API, I do want to do some major refactoring to improve the organization and modernize the C++. I also want to investigate integrating the project with

GradleRIO somehow to make it easy for others to download and build it and its dependencies. First, though, I thought I should publicly demonstrate some of the framework's capabilities and give an example lesson based on it that starts with basic driving and builds up to following a line drawn on a flat surface.

Driving Forward

The first thing to do is to just make the robot move using its drive motors. In this example, the robot will drive forward by applying an equal amount of power to both motors when it's in Autonomous Mode. In Disabled Mode, it will stop the motors by setting the power to zero. The code to do so is below.

#include <WPILib.h>

class Robot : public frc::IterativeRobot

{

private:

RedBotSpeedController myLeftMotor;

RedBotSpeedController myRightMotor;

public:

Robot() :

myLeftMotor(0),

myRightMotor(1)

{

}

void AutonomousInit()

{

myLeftMotor.Set(0.6);

myRightMotor.Set(0.6);

}

void DisabledInit()

{

myLeftMotor.Set(0.0);

myRightMotor.Set(0.0);

}

};

START_ROBOT_CLASS(Robot);

Deploy this program to the robot and enable Autonomous Mode to start the robot driving forward. Be sure to keep a finger on the Disable button so the robot doesn't drive off the table.

Once the robot starts moving, it may not keep a completely straight course, even though equal amounts of power are specified for both motors in the code. This will be addressed in later sections of this tutorial.

Breaking down the Code

Here's an in-depth analysis of the whole code.

#include <WPILib.h>

This includes the code libraries needed to make the robot perform actions as well as to retrieve data from the robot. In this example, we use the speed controller class defined in these libraries to set motor power. All programs for the robot need to begin with this line.

class Robot : public frc::IterativeRobot

{

This begins the robot class, which contains variables for robot parts and methods that perform actions with the variables. There are two main parts to this class declaration: the name of our class, which is simply "Robot", and the public derivation from the base class "frc::IterativeRobot". The Robot class derives from frc::IterativeRobot so that it can use the methods and variables already defined in it. Deriving from this class is also required for every robot program.

private:

RedBotSpeedController myLeftMotor;

RedBotSpeedController myRightMotor;

Here, the speed controller variables are declared inside the Robot class. The RedBotSpeedController class represents a controller for the drive motors on the robot. The main purpose of a speed controller in this program is to specify how much power should be applied to its motor. There are two objects of this class in our program: one for the left motor, and one for the right motor. These objects are declared in the "private" section of the Robot class so that nothing outside the Robot class can access them.

public:

Robot() :

myLeftMotor(0),

myRightMotor(1)

{

}

Now the "public" section of the Robot class is started. In this section, methods and variables are accessible by code outside the class. The first thing defined here is the Robot class constructor, which is a special method that runs whenever a new object of the Robot class is created. The only thing this constructor does is to construct the speed controller objects that we declared above. The speed controller constructors require a single numerical argument that specifies the channel on the main control board that they're connected to. In every robot program, the left controller must always be constructed with channel 0, and the right controller must always be constructed with channel 1. This ensures that whatever speed is set for the controller in the following code is applied to the correct physical motor.

void AutonomousInit()

{

myLeftMotor.Set(0.6);

myRightMotor.Set(0.6);

}

This is the code that makes the robot move when Autonomous Mode is enabled. The method AutonomousInit is inherited from frc::IterativeRobot (from which this Robot class is derived from, as explained above) and is called once whenever the robot switches to Autonomous Mode from Disabled Mode. In the method's body, the speed controller objects are used to set a speed of 0.6 on both motors. Since 0.6 is a positive number, this will cause the robot to drive forward (a negative number would cause the robot to drive backwards). The robot will continue driving forward at this speed until a new speed is set on the controllers.

void DisabledInit()

{

myLeftMotor.Set(0.0);

myRightMotor.Set(0.0);

}

};

To stop the robot when switching to Disabled Mode from Autonomous Mode, zero speed is set for both motors. This causes the motors to stop immediately; no coasting should occur. Just like the AutonomousInit method, the DisabledInit method is inherited from frc::IterativeRobot and runs once whenever the robot is disabled (as well as when the robot program first starts up). The curly brace and semicolon ("};") following the DisabledInit method conclude the Robot class.

START_ROBOT_CLASS(Robot);

Finally, this macro call specifies that the Robot class defined above should be used as the main program for the robot. Again, this line is required for all robot programs.

Turning

Try changing the motor speed values in the above program to make the robot turn rather than drive forward. Which values are needed to make it turn left, and which make it turn right? Which values make the robot turn about its center, and which make it turn about one side?

Using Timers

Instead of driving the robot forever or until it falls off the end of the table or until the Disable button is pressed, it may be helpful to use a timer to figure out when to stop. For this purpose, the Timer class comes in handy. An example of how to use it is shown below.

#include <WPILib.h>

class Robot : public frc::IterativeRobot

{

private:

RedBotSpeedController myLeftMotor;

RedBotSpeedController myRightMotor;

enum DriveState { FORWARD, STOP_FORWARD, BACKWARD, STOP_BACKWARD };

DriveState myState;

frc::Timer myTimer;

public:

Robot() :

myLeftMotor(0),

myRightMotor(1)

{

}

void AutonomousInit()

{

myTimer.Stop();

myTimer.Reset();

myTimer.Start();

myLeftMotor.Set(0.6);

myRightMotor.Set(0.6);

}

void AutonomousPeriodic()

{

if (myTimer.HasPeriodPassed(2.0) == false)

{

return;

}

double speed = 0.0;

switch (myState)

{

case FORWARD:

speed = 0.0;

myState = STOP_FORWARD;

break;

case STOP_FORWARD:

speed = -0.6;

myState = BACKWARD;

break;

case BACKWARD:

speed = 0.0;

myState = STOP_BACKWARD;

break;

case STOP_BACKWARD:

speed = 0.6;

myState = FORWARD;

break;

};

myTimer.Stop();

myTimer.Reset();

myTimer.Start();

myLeftMotor.Set(speed);

myRightMotor.Set(speed);

}

void DisabledInit()

{

myLeftMotor.Set(0.0);

myRightMotor.Set(0.0);

}

};

START_ROBOT_CLASS(Robot);

Just as in the first example, build and deploy this program and enable Autonomous Mode to move the robot. The robot should drive forward for 3 seconds, stop for 1 seconds, drive backward for 3 seconds, and repeat.

Breaking Down the Code

This program is a little more complicated not only because it uses a Timer object, but because it also has a state machine to govern the robot's movement. A state machine is a useful coding pattern whenever the robot has to go through a sequence of steps. It can usually be written using a state variable and a switch statement.

class Robot : public frc::IterativeRobot

{

private:

RedBotSpeedController myLeftMotor;

RedBotSpeedController myRightMotor;

enum DriveState { FORWARD, STOP_FORWARD, BACKWARD, STOP_BACKWARD };

DriveState myState;

frc::Timer myTimer;

Just as in the first example, the two speed controllers are declared using RedBotSpeedController objects. Following those, an enum (short for "enumeration") is declared to list all of the possible states that the robot can be in: moving forward, stopping in the forward position, moving backward, and stopping in the backward position. In this program, the robot is meant to cycle through all of these states in the order shown above, using the timer to remain in each state for a certain amount of time. The current state variable myState is declared as a type of the same name as the enum (DriveState). Finally, the timer itself is declared as an object of frc::Timer.

public:

Robot() :

myLeftMotor(0),

myRightMotor(1)

{

}

The constructor here initializes both speed controllers. Since the state variable and timer object do not need to be constructed with an argument, they are not listed here.

void AutonomousInit()

{

myTimer.Stop();

myTimer.Reset();

myTimer.Start();

myState = FORWARD;

myLeftMotor.Set(0.6);

myRightMotor.Set(0.6);

}

The AutonomousInit method now has more code in it to set up the timer and state to begin the Autonomous mode. Since the Timer object automatically starts counting from the time that the robot program begins, it must be stopped, reset to zero, and started again every time Autonomous mode is enabled. Following that, the robot state is initialized to FORWARD, meaning that the robot should start driving forward when switching to Autonomous mode. To make that actually happen, a positive speed is set for both motors in the final two lines of this method.

void AutonomousPeriodic()

{

if (myTimer.HasPeriodPassed(2.0) == false)

{

return;

}

The AutonomousPeriodic method is run repeatedly for as long as the robot is in Autonomous, as opposed to the AutonomousInit method, which runs just once right when the mode is enabled. The first thing to do in this method is to check the timer. If it has not yet counted past 2 seconds, then it returns immediately; nothing else in this method is executed. When the timer does count 2 seconds, then the program will continue on to the following lines.

double speed = 0.0;

switch (myState)

{

case FORWARD:

speed = 0.0;

myState = STOP_FORWARD;

break;

case STOP_FORWARD:

speed = -0.6;

myState = BACKWARD;

break;

case BACKWARD:

speed = 0.0;

myState = STOP_BACKWARD;

break;

case STOP_BACKWARD:

speed = 0.6;

myState = FORWARD;

break;

};

Here is the main code for the state machine mentioned previously. Every two seconds while the robot is in Autonomous mode, this switch statement will check the current state and decide the next state to switch to and change the motor speed at the same time. For example, since the robot begins in the FORWARD state, two seconds after starting Autonomous mode, it will change to the state STOP_FORWARD and set the speed to zero. Two seconds later, it will change to BACKWARD and change the speed to -0.6. Eventually it will reach the FORWARD state again and cycle between driving forward, stopping, driving backward, and stopping until the robot is disabled.

myTimer.Stop();

myTimer.Reset();

myTimer.Start();

myLeftMotor.Set(speed);

myRightMotor.Set(speed);

These lines following the switch statement reset the timer every two seconds and apply the new speed to the speed controllers.

void DisabledInit()

{

myLeftMotor.Set(0.0);

myRightMotor.Set(0.0);

}

Just as before, the robot should stop whenever it's disabled.

Detecting a Line

In addition to driving, programs can also read data from sensors connected to the robot. For example, infrared sensors can be used to detect if a nearby object is light or dark in color. When a voltage is applied to the supply input of the sensor, the voltage that it returns varies depending upon the amount of light that is reflected into its receiver: the voltage is high when it receives less light, and low when it receives more light.

Attach three infrared sensors to the bottom of the front of the robot like in the picture below. Be sure that they are facing down and are within a couple centimeters of the table surface (also ensure they don't actually touch the surface).

Wire the sensors to the analog inputs 3, 6, and 7 on the control board as shown below.

The following code can be used to continuously read values from the sensors and display them on the SmartDashboard.

#include <WPILib.h>

class Robot : public frc::IterativeRobot

{

private:

frc::AnalogInput myLeftSensor;

frc::AnalogInput myMiddleSensor;

frc::AnalogInput myRightSensor;

public:

Robot() :

myLeftSensor(3),

myMiddleSensor(6),

myRightSensor(7)

{

frc::SmartDashboard::init();

}

void DisabledInit()

{

}

void AutonomousInit()

{

}

void AutonomousPeriodic()

{

frc::SmartDashboard::PutNumber("Left Sensor", myLeftSensor.Get());

frc::SmartDashboard::PutNumber("Middle Sensor", myMiddleSensor.Get());

frc::SmartDashboard::PutNumber("Right Sensor", myRightSensor.Get());

}

};

START_ROBOT_CLASS(Robot);

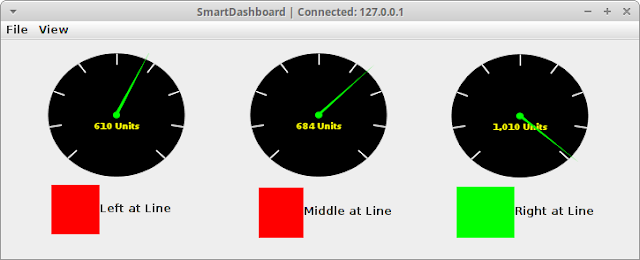

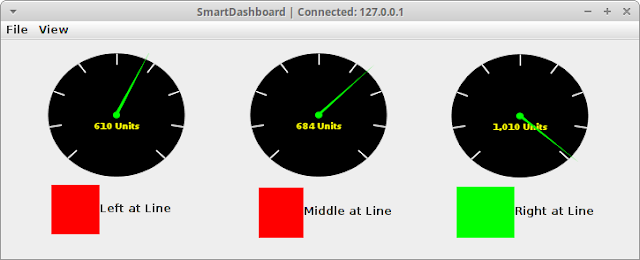

Build and deploy this program to the robot and enable Autonomous mode. Then, start up SmartDashboard (make sure that it's using the server at localhost or 127.0.0.1). There should be three number fields visible. Change the fields to dials, and the SmartDashboard should look something like the screenshot below.

Breaking Down the Code

Sensors can be used in code much like how speed controllers were used in the previous examples. The first step is to declare them as variables in the Robot class:

class Robot : public frc::IterativeRobot

{

private:

frc::AnalogInput myLeftSensor;

frc::AnalogInput myMiddleSensor;

frc::AnalogInput myRightSensor;

The three infrared sensors are declared as analog sensors because they return numeric, non-binary values; the possible values range from 0 to 1023. If a sensor could only return either a 0 or a 1, then it would be declared as a digital sensor.

Robot() :

myLeftSensor(3),

myMiddleSensor(6),

myRightSensor(7)

{

frc::SmartDashboard::init();

}

Just like speed controllers, sensors have to be constructed with the numbers of the control board port they're connected to. Also in this constructor is an initialization call for the SmartDashboard. This is needed to be able to send and receive data from the SmartDashboard later in the robot program.

void DisabledInit()

{

}

void AutonomousInit()

{

}

Notice how both the DisabledInit() and AutonomousInit() methods are both empty in this new program. That's because there is no nothing to do just once whenever the robot changes modes. Instead, the sensors must be read continuously in the AutomousPeriodic method below.

void AutonomousPeriodic()

{

frc::SmartDashboard::PutNumber("Left Sensor", myLeftSensor.Get());

frc::SmartDashboard::PutNumber("Middle Sensor", myMiddleSensor.Get());

frc::SmartDashboard::PutNumber("Right Sensor", myRightSensor.Get());

}

Every time this periodic method runs, all three infrared sensors are read, and their current values are put on the SmartDashboard using the PutNumber function. This function takes a label that describes what the data is and the current value that should be shown next to that label. For different types of data (other than numbers) that must be sent to the SmartDashboard, the PutBoolean() and PutString() functions are also available.

Detecting a Line

With the above sensor program running on the robot and SmartDashboard running on the driver station, manually move the robot so that one of the sensors is above a dark surface and the others above a light surface. How do the sensor readings change? Repeat this test for each of the three sensors. Do they all change to the same values? Are the readings affected by the ambient light in the room?

For the later activities, it will be important to determine if a sensor is above a dark line drawn on a white surface. That means that the analog sensor value needs to be converted to a digital value: 0 (false) for being off a line and 1 (true) for being on a line. Write a new method to perform this conversion, and use it to publish the digital values to the SmartDashboard. The SmartDashboard should eventually look like the screenshot below.

Following a Straight Line

Most two-motor robots are often unable to keep a straight path just by applying equal power to both sides for very long. As shown in the first experiment, the robot soon veers off to one side or zig-zags from side to side. This is caused by several factors, including imperfections in the drivetrain, deformities in the driving surface, and unequal distribution of electrical power to the motors.

Feedback from sensors can be used to overcome these obstacles. In this activity, a thick, straight black line on the surface will serve to guide the robot on the correct course. Using the skills learned in the previous examples, write a robot program that automatically adjusts the power to the motors depending on which of the infrared sensors see or don't see the line. For example, if the left sensor does not see the line, but the middle and right ones do, which way should the robot turn? How quickly should it turn? What should each of the motors' speeds be to accomplish that turn?

As a suggestion, begin with low cruising speed for the motors. This will make it easier to judge if the robot is seeing and following the line correctly and to catch it if it becomes lost. Also, it may help to either log the sensor readings and other program variables to a file on the driver station or continuously publish them to the SmartDashboard, or both. Keep in mind that values can also be read from the SmartDashboard; this makes it very easy to quickly try out different sets of constants for tuning a program without having to recompile and restart the robot.

Following a Line With a Turn

Once the robot can follow a straight line, the final step is to handle a sharp turn in the line of at least 90 degrees. Any misstep in the program at the wrong moment can now throw the robot completely off the line and cause it to become lost.

One approach to this problem is to augment the sensor-feedback-drive loop with some special logic for when the robot arrives at the turn. This code could cause the robot to follow a specific sequence of steps to get it to force itself through the turn and continue onto the next straight segment. Remember that a state machine, like the one described in the timed driving example above, can be used to encode these steps in the program.